Dataflow Architecture

Maximizing inference performance through careful architecture design

Standard compute architectures tackle sequential algorithms by using a powerful, power-intensive CPU core and surrounding it with a memory architecture that matches the memory profile of those applications. AI inference is not a typical sequential application; it is a graph-based application in which the output of one graph node flows to the input of other graph nodes.

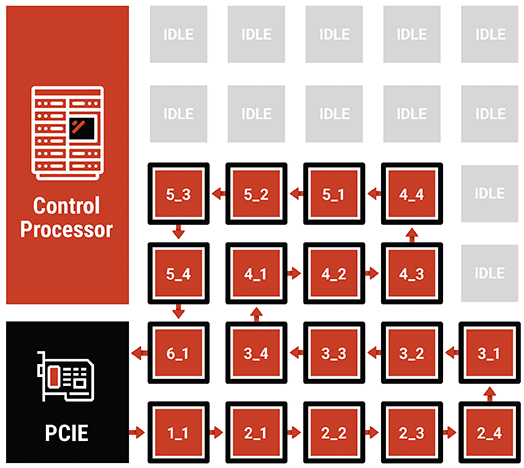

Graph applications provide opportunities to extract parallelism by assigning a different compute element to each graph node. Completed results from one graph node flow to the next graph node for the next operation, which is ideal for a dataflow architecture. In our dataflow architecture, we assign a graph node to each compute-in-memory array and put the weight data for that graph node into that memory array. When the input data for that graph node is ready, it flows to the correct location adjacent to the memory array, and the local compute and memory then execute upon it. Many inference applications use operations like convolution, which processes small regions of the image frame at a time instead of the whole frame simultaneously.
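The firing rule described above (a node executes once all of its inputs have arrived, using weights that stay in place) can be illustrated with a small software model. This is only a sketch under stated assumptions, not a description of the actual hardware: the `Node` class, the `run_dataflow` function, the scalar values, and the weighted-sum operation are all hypothetical stand-ins chosen for illustration.

```python
from collections import deque


class Node:
    """Hypothetical stand-in for one compute-in-memory array holding one graph node."""

    def __init__(self, name, weights, inputs):
        self.name = name
        self.weights = weights  # weights stay with the node; only activations move
        self.inputs = inputs    # names of the upstream nodes (or external inputs)
        self.pending = {}       # input values that have arrived so far

    def ready(self):
        # The node may fire only when every input has arrived.
        return len(self.pending) == len(self.inputs)

    def fire(self):
        # Illustrative operation (an assumption): weighted sum of the inputs.
        return sum(w * self.pending[src] for w, src in zip(self.weights, self.inputs))


def run_dataflow(nodes, external_inputs):
    """Propagate values through the graph; return all results and the firing order."""
    # Map each producer name to the nodes that consume its output.
    consumers = {}
    for node in nodes.values():
        for src in node.inputs:
            consumers.setdefault(src, []).append(node)

    results = {}
    firing_order = []
    queue = deque(external_inputs.items())
    while queue:
        src, value = queue.popleft()
        results[src] = value
        for node in consumers.get(src, []):
            node.pending[src] = value  # the data flows to the consuming node
            if node.ready():
                firing_order.append(node.name)
                queue.append((node.name, node.fire()))
    return results, firing_order


# Example graph: x feeds a and b, and both feed c.
nodes = {
    "a": Node("a", weights=[2], inputs=["x"]),
    "b": Node("b", weights=[3], inputs=["x"]),
    "c": Node("c", weights=[1, -1], inputs=["a", "b"]),
}
results, firing_order = run_dataflow(nodes, {"x": 5})
print(results["c"], firing_order)
```

In this model no central scheduler decides the order of execution: `c` runs only because both `a` and `b` have delivered their results, which is the property that makes graph workloads a natural fit for dataflow execution.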

Our dataflow architecture maximizes inference performance through parallel operation of compute-in-memory elements. This pipelines image processing: neural network nodes (or “layers”) run in parallel, each working on a different part of the frame. Built from the ground up as a dataflow architecture, the Mythic architecture minimizes the memory and computational overhead required to manage the dependency graphs needed for dataflow computing, and keeps the application operating at maximum performance.
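To make the pipelining idea concrete, the sketch below computes an idealized schedule in which each layer works on a different part of the frame during the same time step. It is a simplified model, not the actual scheduler: the function name `pipeline_schedule`, the division of the frame into equal "tiles", and the assumption that every layer takes one time step per tile and needs only the matching tile from the previous layer are all illustrative assumptions.

```python
def pipeline_schedule(num_layers, num_tiles):
    """Return, for each time step, a dict mapping layer index -> tile index.

    Assumes layer k can start on tile i one step after layer k-1 finished tile i.
    """
    steps = []
    for t in range(num_layers + num_tiles - 1):
        active = {
            layer: t - layer
            for layer in range(num_layers)
            if 0 <= t - layer < num_tiles
        }
        steps.append(active)
    return steps


schedule = pipeline_schedule(num_layers=3, num_tiles=4)
for t, active in enumerate(schedule):
    print(f"step {t}: " + ", ".join(f"layer {l} -> tile {i}" for l, i in active.items()))

# Pipelined: 3 + 4 - 1 = 6 steps. One layer-tile operation at a time: 3 * 4 = 12 steps.
print(len(schedule), 3 * 4)
```

Under these assumptions the pipeline is full from step 2 onward, with all three layers busy on different tiles at once. Real convolution layers need neighboring pixels around each region, so an actual schedule would be more involved than this model suggests.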